Parallel programming - Overview. Threads.

Created for

Iva E. Popova, 2022-2023,

Basic Concepts

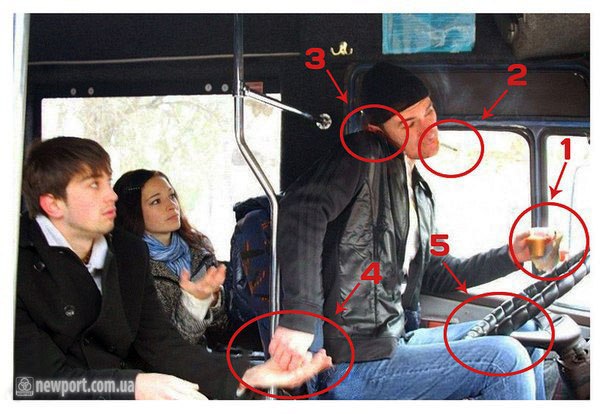

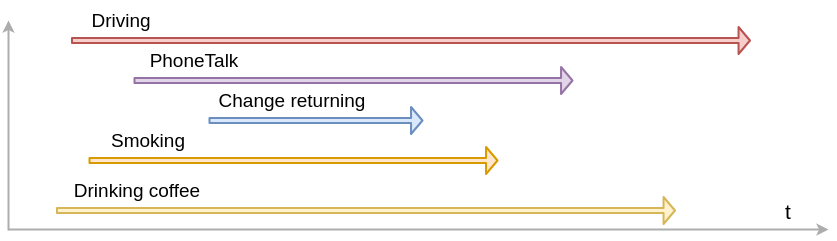

Multitasking

- Multitasking is the ability of an operating system or application to execute multiple tasks (processes) at the same time, either by interleaving them on one CPU or by running them in parallel on several.

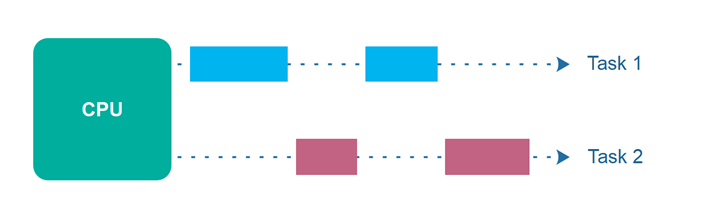

Concurrency vs Parallelism

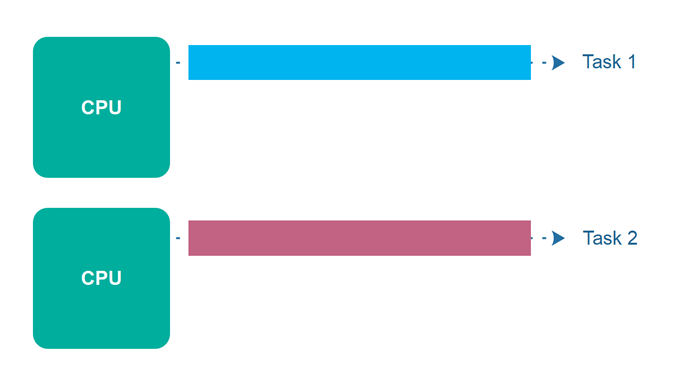

- Parallel execution: multiple tasks are executed simultaneously by leveraging multiple processors or CPU cores.

- Concurrent execution: multiple instruction sequences are in progress during overlapping time periods. It is about dealing with multiple tasks that all make progress, without necessarily running at the same instant.

- Analogy: juggling 2 balls with 1 hand (concurrency) vs juggling 1 ball in each hand (parallelism).
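To make "progress without running simultaneously" concrete, here is a minimal sketch of concurrency without parallelism: a toy round-robin scheduler interleaves two tasks, written as Python generators, on a single thread. The helpers `task` and `run_round_robin` are hypothetical and only illustrate the idea; this is not how the OS or Python's `threading` module actually schedules work.

```python
from collections import deque

def task(name, steps):
    """A task that hands control back to the scheduler after each step."""
    for i in range(steps):
        yield f"{name}:{i}"

def run_round_robin(tasks):
    """Hypothetical toy scheduler: runs one step of each task in turn."""
    queue = deque(tasks)
    trace = []
    while queue:
        current = queue.popleft()
        try:
            trace.append(next(current))  # run one step of this task
            queue.append(current)        # send it to the back of the line
        except StopIteration:
            pass                         # task finished, drop it
    return trace

trace = run_round_robin([task("A", 3), task("B", 2)])
print(trace)  # ['A:0', 'B:0', 'A:1', 'B:1', 'A:2']
```

Both tasks advance, but only one step ever executes at a time: that is the "2 balls with 1 hand" case.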

Preliminary: CPU, Cores, Threads, Processes

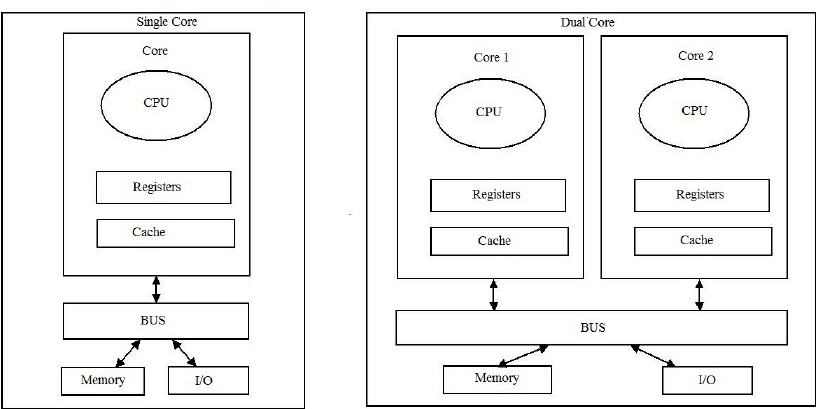

- CPU (Central Processing Unit): the main processor in a computer that executes instructions and performs calculations, acting as the brain of the computer.

- Core: an individual processing unit within a CPU capable of executing its own instructions. Modern CPUs can have multiple cores, allowing for parallel processing.

- Hardware threads: logical processors created by Simultaneous Multithreading (SMT), which Intel markets as Hyper-Threading, allowing a single core to handle multiple instruction streams concurrently.

- The operating system treats each hardware thread as a separate processor. For example, a quad-core CPU with Hyper-Threading will appear as eight logical processors to the OS.

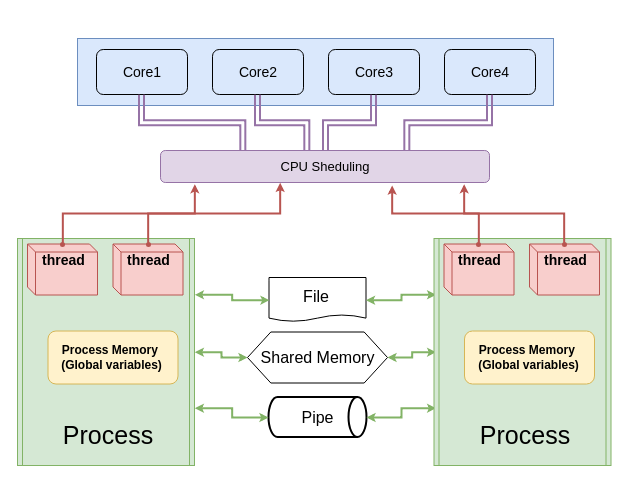

- Process: an independent program in execution, with its own memory space and resources managed by the operating system.

- The operating system can switch between processes very quickly (context switching), giving the appearance that multiple processes run simultaneously even on a single hardware thread.

- Software threads are managed by the operating system (kernel threads) or by a threading library within an application (user threads). They can run on a single hardware thread or be distributed across multiple hardware threads.

- Multiple threads within a single process can execute concurrently, sharing the same memory space.
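A minimal sketch of the last two points, using only the standard library: three threads started from one script report the same process id (`os.getpid()`) and append to one list object, because they share the memory of their process. The function `record` and the thread labels are made up for illustration; the lock is a precaution for shared data rather than something this tiny example strictly needs.

```python
import os
import threading

shared_log = []              # one list object, visible to every thread in this process
pids = []
log_lock = threading.Lock()  # serializes access to the shared lists

def record(label):
    # Every thread sees the same process id and appends to the same lists
    with log_lock:
        pids.append(os.getpid())
        shared_log.append(label)

threads = [threading.Thread(target=record, args=(f"thread-{i}",)) for i in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(sorted(shared_log))          # ['thread-0', 'thread-1', 'thread-2']
print(set(pids) == {os.getpid()})  # True: all threads ran inside this one process
```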

Von Neumann Architecture

Single-core vs Multi-core Processor

Check CPU details

- On Linux terminal (`lscpu` is not available on macOS):

```shell
lscpu | head -10
```

- On macOS terminal (physical cores, then logical processors):

```shell
sysctl -n hw.physicalcpu hw.logicalcpu
```

- On Windows: Find out how many cores your processor has @support.microsoft.com

- By Python script (you must install psutil first, e.g. `pip install psutil`):

```python
import psutil

# Get the number of physical cores (None if it cannot be determined)
physical_cores = psutil.cpu_count(logical=False)
print(f"Number of physical cores: {physical_cores}")

# Get the number of logical processors (hardware threads)
logical_processors = psutil.cpu_count(logical=True)
print(f"Number of logical processors (hardware threads): {logical_processors}")
```
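If installing psutil is not an option, the standard library gives a rougher answer. A minimal sketch: `os.cpu_count()` reports logical processors only (there is no physical-core count in the standard library), and `os.sched_getaffinity()`, available only on some Unix platforms such as Linux, tells how many of them this process may actually use.

```python
import os

# Number of logical CPUs in the system (None if it cannot be determined)
logical = os.cpu_count()
print(f"Logical CPUs (os.cpu_count): {logical}")

# On Linux, the set of CPUs this process is allowed to run on can be
# smaller than os.cpu_count(), e.g. inside containers or under taskset.
if hasattr(os, "sched_getaffinity"):
    usable = len(os.sched_getaffinity(0))  # 0 means "the current process"
    print(f"CPUs usable by this process: {usable}")
```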

Processes

- Processes are independent execution units that contain their own state information, use separate memory spaces, and communicate with each other through inter-process communication (IPC) mechanisms like pipes, sockets, or shared memory.

- Characteristics:

- Isolation: Processes are isolated from each other. Memory in one process is not accessible to another.

- Robustness: A crash in one process does not affect other processes.

- Context Switching: More resource-intensive than switching between threads, because each process has its own memory space.

- Concurrency and Parallelism: Ideal for CPU-bound tasks (1), as each process can run on a separate core or CPU.

1. CPU-bound task: a task that requires significant CPU processing time, such as complex calculations, data processing, or image rendering. Its performance is limited by the speed of the CPU.

Example in Python

```python
import multiprocessing
import time

def worker(num):
    print(f'Worker {num} starting')
    # Simulate some work
    time.sleep(2)
    print(f'Worker {num} done')

if __name__ == '__main__':
    start = time.time()
    processes = []
    # Start 5 worker processes; each one runs worker(i) independently
    for i in range(5):
        p = multiprocessing.Process(target=worker, args=(i,))
        processes.append(p)
        p.start()
    # Wait until all processes have finished
    for p in processes:
        p.join()
    end = time.time()
    print(f'time: {end-start}')
```

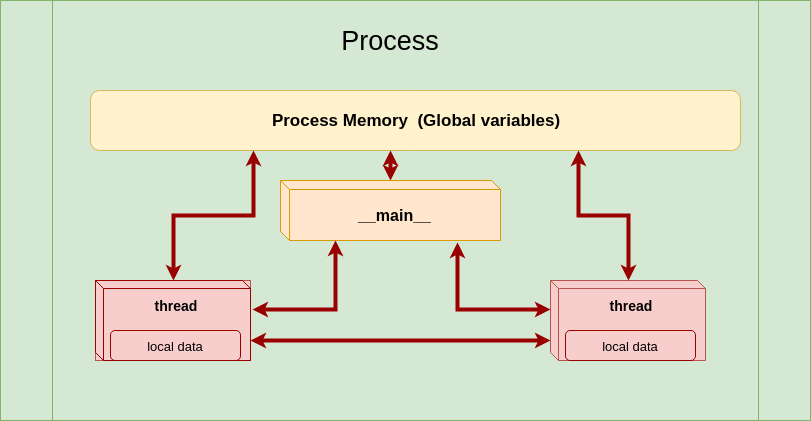

Threads

- Threads are lighter units of execution that share the same memory space within a single process. They can run concurrently but are limited by the Global Interpreter Lock (GIL) in CPython, which can prevent multiple threads from executing Python bytecodes simultaneously.

- A Thread lives in a Process. One Process can run multiple Threads.

- Characteristics:

- Shared Memory: Threads share the same memory space, making communication between threads easier and faster.

- Lightweight: Less resource-intensive than processes since they share memory.

- GIL Limitation: In CPython, the GIL ensures only one thread executes Python bytecode at a time, limiting true parallelism for CPU-bound tasks.

- Concurrency: Best suited for I/O-bound tasks (1), where the program spends time waiting for external events.

1. I/O-bound task: a task that spends most of its time waiting for input/output operations, such as reading/writing files, fetching data from a network, or waiting for user input. Its performance is limited by the speed of the I/O operations (e.g., disk access, network requests).

Example in Python

```python
import threading
import time

def worker(num):
    print(f'Worker {num} starting')
    # Simulate some work
    time.sleep(2)
    print(f'Worker {num} done')

if __name__ == '__main__':
    start = time.time()
    threads = []
    # Start 5 threads inside this single process; each one runs worker(i)
    for i in range(5):
        t = threading.Thread(target=worker, args=(i,))
        threads.append(t)
        t.start()
    # Wait until all threads have finished
    for t in threads:
        t.join()
    end = time.time()
    print(f'time: {end-start}')
```

Threads vs Processes

- Memory:

- Processes: Separate memory space, more secure but with higher overhead.

- Threads: Shared memory space, less overhead but risk of data corruption due to concurrent access.

- Performance:

- Processes: Better for CPU-bound tasks due to true parallelism on multiple cores.

- Threads: Better for I/O-bound tasks; limited by GIL for CPU-bound tasks.

- Communication:

- Processes: Requires IPC mechanisms (pipes, queues, shared memory).

- Threads: Easier and faster through shared variables and data structures.

- Failure Isolation:

- Processes: A crash in one process does not affect others.

- Threads: A crash in one thread can affect the entire process.
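The "risk of data corruption due to concurrent access" comes from operations like `counter += 1`, which read, modify, and write shared data in separate steps, so two threads can interleave and lose an update. A minimal sketch of the usual remedy, a `threading.Lock` around the shared update; the names `counter` and `increment` are illustrative. Whether lost updates actually show up without the lock depends on the interpreter version and timing, so the lock is what makes the result reliable.

```python
import threading

counter = 0
counter_lock = threading.Lock()

def increment(times):
    global counter
    for _ in range(times):
        # counter += 1 is a read-modify-write; holding the lock makes it atomic
        with counter_lock:
            counter += 1

threads = [threading.Thread(target=increment, args=(100_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 400000
```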

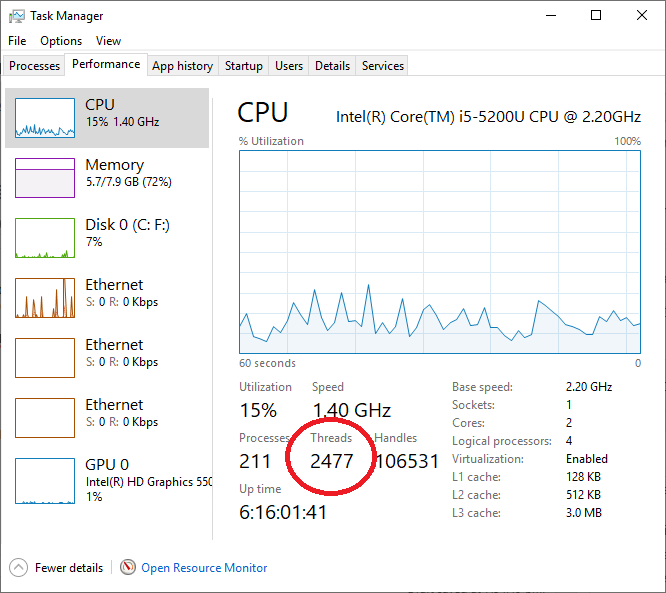

Check running processes and threads - Windows

- You can view the number of currently running processes and threads in Task Manager:

- Or, you can use Process Explorer to see all the threads of a process.

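Check running processes and threads - Linux/MacOS

```shell
# view all processes:
top

# view all threads of one process (Linux):
top -H -p <pid>

# view all threads of one process (macOS, whose top has no -H option):
ps -M <pid>
```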
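To know which `<pid>` to inspect, a script can print its own process id and list its live threads. A minimal sketch using the standard `os.getpid()`, `threading.active_count()` and `threading.enumerate()`; the `idle` workers and their names are made up for illustration.

```python
import os
import threading
import time

def idle(seconds):
    time.sleep(seconds)  # keep the thread alive long enough to be observed

workers = [threading.Thread(target=idle, args=(0.5,), name=f"idle-{i}") for i in range(3)]
for w in workers:
    w.start()

# The PID to pass to `top -H -p <pid>` (Linux) or `ps -M <pid>` (macOS)
print("PID:", os.getpid())
# Threads currently alive in this process, including the main thread
active = threading.active_count()
alive_names = {t.name for t in threading.enumerate()}
print("Active threads:", active)
print("Names:", sorted(alive_names))

for w in workers:
    w.join()
```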

Thread-based parallelism

Multithreading in Python

- The `threading` module is the preferred way in Python to do thread-based "parallelism" (note the GIL!).

- A thread is created by the `Thread` class constructor.

- Once created, the thread is started by its `start()` method.

- Other threads can call a thread's `join()` method. This blocks the calling thread until the thread whose `join()` method was called terminates.

Creating Thread objects

```python
tr_obj = threading.Thread(target=None, name=None, args=(), kwargs={}, daemon=None)
```

- `target`: the function to be run in the thread.

- `name`: the thread name. By default, a unique name of the form "Thread-N" is constructed, where N is a small decimal number (since Python 3.10, "Thread-N (target)" when a target is given).

- `args`: the argument tuple for the target invocation.

- `kwargs`: a dictionary of keyword arguments for the target invocation.

- `daemon`: if not None, explicitly sets whether the thread is daemonic; if None (the default), the daemonic property is inherited from the creating thread.

- A non-daemon thread prevents the main program from exiting while it is still running. Daemonic threads do not prevent the main program from exiting; they are abruptly stopped when it exits.
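A short sketch exercising the constructor parameters described above: `name`, `args`, `kwargs` and `daemon`. The function `greet` and the `results` dictionary are hypothetical; `is_alive()` is a standard `Thread` method used here to show the thread has ended after `join()`.

```python
import threading

results = {}

def greet(who, punctuation="."):
    # current_thread().name is the name passed to the constructor
    results[who] = f"Hello, {who}{punctuation} (from {threading.current_thread().name})"

tr = threading.Thread(
    target=greet,
    name="greeter",
    args=("Ada",),                # positional arguments for greet()
    kwargs={"punctuation": "!"},  # keyword arguments for greet()
    daemon=False,                 # explicitly a non-daemon thread
)
print(tr.name, tr.daemon)  # greeter False
tr.start()
tr.join()
print(results["Ada"])      # Hello, Ada! (from greeter)
print(tr.is_alive())       # False: the thread has terminated
```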

Creating and running thread - example

```python
import threading
import time

def worker(x):
    # current_thread() replaces the deprecated currentThread()
    tid = threading.current_thread().name
    print(f"Work started in thread {tid}")
    time.sleep(5)
    print(f"Work ended in thread {tid}")

# create the thread:
tr = threading.Thread(target=worker, args=(42,))

# start the thread:
tr.start()

# wait until the thread terminates:
tr.join()

print("Worker did its job!")
```
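`Thread` discards the return value of its target, so a worker that computes something must hand the result back another way. A minimal sketch of one common approach, assuming each thread gets its own slot in a shared list (the helper `square_into` is hypothetical). Because every thread writes to a different index, no lock is needed here.

```python
import threading

def square_into(results, index, x):
    # The return value of a thread's target is ignored,
    # so store the result in this thread's own slot instead
    results[index] = x * x

inputs = [2, 3, 4]
results = [None] * len(inputs)
threads = [
    threading.Thread(target=square_into, args=(results, i, x))
    for i, x in enumerate(inputs)
]
for t in threads:
    t.start()
for t in threads:
    t.join()  # after join(), this thread's slot is filled

print(results)  # [4, 9, 16]
```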

Sequential vs multi-threaded processing

```python
import threading
import time

def worker(x):
    tid = threading.current_thread().name
    # do some hard and time-consuming work:
    time.sleep(2)
    print(f"Worker {tid} is working with {x}")

#################################################
# Sequential Processing:
#################################################
t = time.time()
worker(42)
worker(84)
print("Sequential Processing took:", time.time() - t, "\n")

#################################################
# Multithreaded Processing:
#################################################
tmulti = time.time()
tr1 = threading.Thread(target=worker, args=(42,))
tr2 = threading.Thread(target=worker, args=(84,))
tr1.start()
tr2.start()
tr1.join()
tr2.join()
print("Multithreaded Processing took:", time.time() - tmulti)
```

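You can benefit from multithreading in Python if the threaded workers are not CPU-intensive (for example, when they sleep or wait for I/O), because a waiting thread releases the GIL and lets the other threads run.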
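For I/O-bound work, the standard `concurrent.futures.ThreadPoolExecutor` is a higher-level alternative to creating `Thread` objects by hand: it manages a pool of threads and returns the workers' results. A minimal sketch, where `fake_download` is a hypothetical stand-in for a network or disk call and simply sleeps.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def fake_download(n):
    # Stand-in for an I/O-bound call: the thread just waits (and releases the GIL)
    time.sleep(0.2)
    return n * 10

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=5) as pool:
    # map() runs fake_download in the pool and yields results in input order
    results = list(pool.map(fake_download, range(5)))
elapsed = time.perf_counter() - start

print(results)                 # [0, 10, 20, 30, 40]
print(f"took {elapsed:.2f}s")  # close to 0.2 s rather than 5 * 0.2 s
```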

GIL - the Global Interpreter Lock

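- The GIL is a mutex (lock) in CPython that allows only one thread at a time to execute Python bytecode, so it prevents "real" parallel execution of Python code by multiple threads. Instead, threads take turns: a thread gives up the GIL cooperatively when it blocks (e.g., on I/O), and the interpreter also switches threads preemptively at regular intervals.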

- GIL @wiki.python.org
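The preemptive part of this turn-taking can be observed from Python: `sys.getswitchinterval()` returns how often (in seconds) CPython asks the running thread to release the GIL, and `sys.setswitchinterval()` changes it. A minimal sketch; the value 0.01 is an arbitrary example.

```python
import sys

# How often (in seconds) CPython asks the running thread to drop the GIL
interval = sys.getswitchinterval()
print(f"switch interval: {interval} s")  # 0.005 unless changed

# A larger interval means fewer, longer time slices per thread
sys.setswitchinterval(0.01)
print(f"new switch interval: {sys.getswitchinterval()} s")
sys.setswitchinterval(interval)  # restore the original value
```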

The GIL effect - example

- For CPU-intensive tasks, multithreading gives no speedup over sequential single-threaded processing and can even be slower, because of the extra cost of switching between threads.

```python
import threading
import time

max_range = 10_000_000
max_range_half = max_range // 2

result = 0
result_lock = threading.Lock()  # protects the shared "result" variable

def worker(r):
    global result
    tid = threading.current_thread().name
    # do some hard and time-consuming (CPU-bound) work:
    res = 0
    for i in r:
        res += i
    # "result += res" is a read-modify-write on shared data, so guard it
    with result_lock:
        result += res
    print(f"Worker {tid} is working with {r}")

#################################################
# Sequential Processing:
#################################################
t = time.time()
result = 0
worker(range(max_range_half))
worker(range(max_range_half, max_range))
print("Sequential Processing result: ", result)
print("Sequential Processing took:", time.time() - t, "\n")

#################################################
# Multithreaded Processing:
#################################################
t = time.time()
result = 0
tr1 = threading.Thread(target=worker, args=(range(max_range_half),))
tr2 = threading.Thread(target=worker, args=(range(max_range_half, max_range),))
tr1.start()
tr2.start()
tr1.join()
tr2.join()
print("Multithreaded Processing result: ", result)
print("Multithreaded Processing took:", time.time() - t, "\n")
```